What to do with AI hype

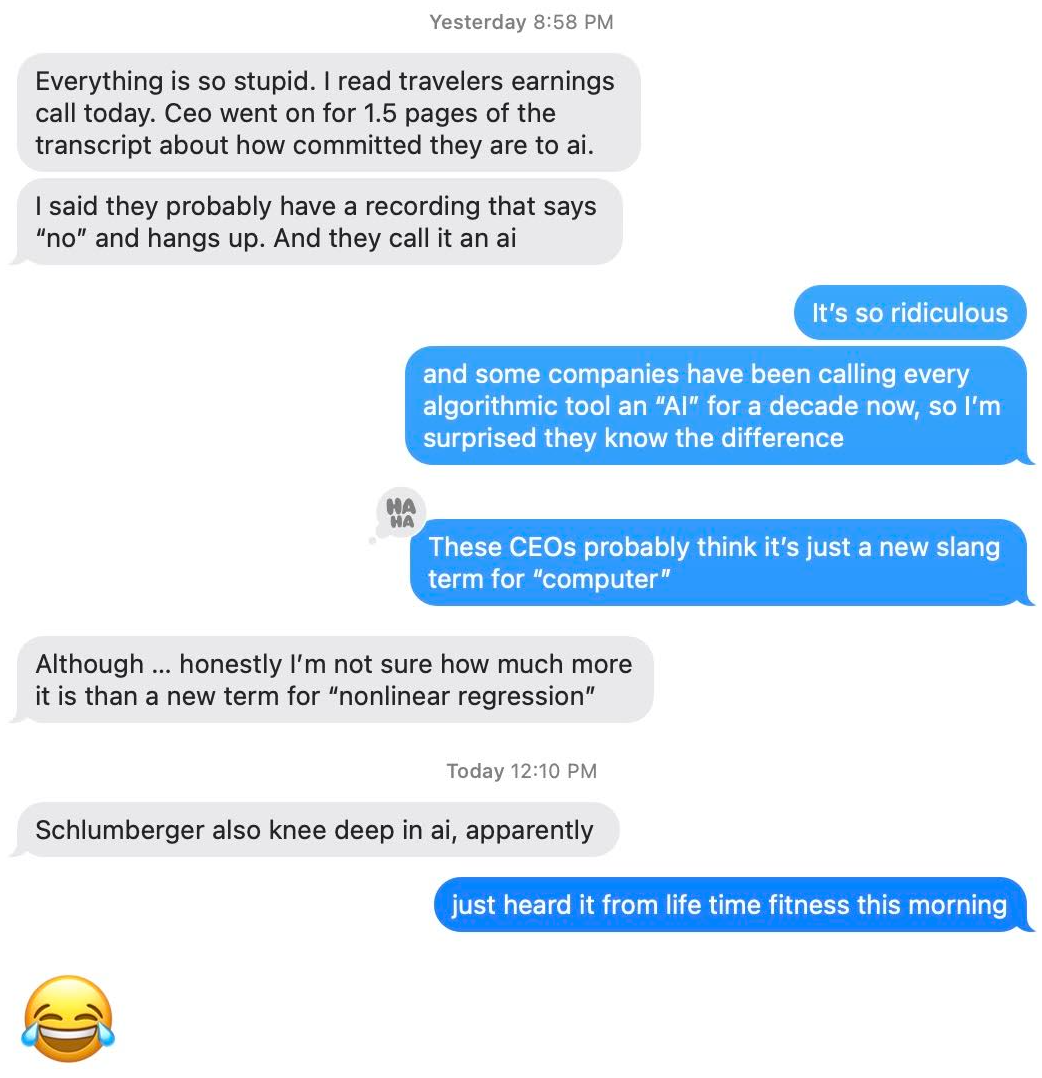

I know I'm not the only one in the stock market who's already tired of hearing about AI this year. But apart from rolling our eyes, is there anything investors should be doing with this commentary?

Obviously there are a number of businesses where this is already a very big deal, from GPUs to test prep. That's not what we're talking about here. This is about the other 95% of public companies, or rather the half of them who are giving us an "AI" story. Let's try to break these stories down into a few basic types:

Revenue optimization

This includes any type of dynamic pricing, personalized marketing, or the Life Time Fitness subscription hikes that I was referencing in the exchange above:

When you look [at] the new member rack rate vs. the people who have joined earlier with lower dues… if everybody was at that rack rate, right, [it] would be $17–18M/month. And I'm glad to cover this on this call, because there is no way, at any given time, that we will ever do such a thing. Because that would be like brand suicide. However, what we do have is [an] opportunity to continually pass on a slight amount of dues increase very systematically, with a complete, sophisticated use of AI, where we know that it doesn't [affect] the satisfaction or the attrition rate…

Hopefully no one reading this is scandalized that their gym renewal rates may be individually targeted, because this is how many services have worked for a long time. If anything, it's refreshing when management is this upfront about it on earnings calls. And for investors who want companies to maximize revenue, it doesn't really matter whether they call these algorithms "AI" or anything else.

But with any kind of revenue optimization, the key phrase for investors to remember is "diminishing returns." In a market that's even somewhat competitive, every new trick is targeting a smaller incremental gain than the previous trick. The risk of new tech is not that it won't work; it's that companies will be tricked into increasing their investment in these systems as the incremental returns tail off.
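To make "diminishing returns" concrete, here is a minimal sketch in Python. Everything in it is a hypothetical assumption for illustration, not data from any company: the function name `incremental_returns`, the idea that each new trick captures a fixed share of whatever revenue headroom is left, and a flat cost per round.

```python
def incremental_returns(headroom, capture_rate, cost_per_round, rounds):
    """Return (round, incremental_gain, gain_per_dollar) for each round.

    Assumption (illustrative only): each round captures a fixed share of
    the revenue headroom that previous rounds left on the table.
    """
    results = []
    remaining = headroom
    for n in range(1, rounds + 1):
        gain = remaining * capture_rate      # this round's incremental revenue
        remaining -= gain                    # less headroom for the next trick
        results.append((n, gain, gain / cost_per_round))
    return results


# Hypothetical inputs: 100 units of headroom, each round captures half of
# what is left, and every round costs 10 units to build and run.
rows = incremental_returns(headroom=100.0, capture_rate=0.5,
                           cost_per_round=10.0, rounds=5)
for n, gain, per_dollar in rows:
    print(f"round {n}: gain {gain:.2f}, return per dollar {per_dollar:.2f}")
```

Under these made-up inputs the cost per round stays flat while the gain halves each time, so at some point a round returns less than it costs. That is the point where continued investment stops making sense, whatever the system is called.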

Agency laundering

This is a close cousin of revenue optimization, but it applies to cases where a black-box algorithm or "AI" is doing things that the business would be unwilling to have humans do. Maybe it's discriminating against customers in a way that risks regulatory action or bad publicity. Maybe it's colluding with competitors' algorithms in a way that two human employees would not be allowed to do. Or maybe it's just doing things that employees would be unwilling to do…

Again, we're not here to judge. But for any company that would never dream of doing these things — fine, then it's just revenue optimization, right? And investors should expect diminishing returns.

For any CEO who's being told by their employees or vendors that they can have it both ways, or any investors who believe it from CEOs — i.e. that a new consumer-facing revenue optimization tool will deliver higher returns on time/capital than all the previous optimization tools… without doing anything anticompetitive or otherwise unsavory… the term for that is not "AI," it's "magic."

Cost reduction

Again, we need to separate consumer-facing use cases and look at them first. For example, one easy story across many sectors seems to be that "AI" customer service bots will be a dramatic leap forward from every previous round of customer-facing chatbot, phone tree, etc.

And again, the core problem is that there's already been so much previous "optimization"… well, let me give you an example. A couple weeks ago, I had a funny customer-service interaction with a large online retailer. The problem was simple — order lost in shipping, needed to be re-sent — and the chatbot solved it in about 30 seconds. But at the very end, it hit an unlikely hurdle — my card on file had just expired — and it had to punt me to a human.

All they needed was my new card info, but five minutes later, the human support rep wasn't even close to solving the issue, and you can imagine why. First, they had to go through their whole script about how happy they were to have me as a customer. Then, for whatever reason, they were unable to read the previous chat, and had to start over and ask me all the same questions…

Now, I don't blame the human rep for this at all. I think the problem is that customer support has been automated and systematized and cost-engineered over and over, decade after decade… and at this point, companies have gotten so good at forcing their human employees to act like bots that a few of the actual bots have started to pull ahead.

But that's not an "AI" story, right? It's a pre-AI story. And if that company was telling investors that their new GPT chatbot would be more humanlike, I wouldn't even know what they meant. Remember, this was a case where I've been trained to want the bot, and I would now trust it less if I thought I was talking to a human.

So for a consumer-facing use case where the AI is taking out costs, rather than optimizing revenue, I'm not even sure I buy the diminishing returns argument. Once you fully account for the costs, and honestly measure and attribute all the impacts, I'd be surprised if many of these projects still have positive returns at all.

B2B/internal

I saved B2B pricing and internal cost reduction projects for this last section, because that's where I would expect the most effective AI use cases to emerge first.

As an internal example: even the largest public REITs often have relatively lean headcounts, and they have to do things like reviewing hundreds of broker offering memorandums, some of which are ~~full of lies~~ less accurate than others… or as a B2B example, if a chain retailer said they were paying above-market rents at many of their stores, or above-market pricing to many of their vendors, and a new AI-driven system would be faster and cheaper than the specialized consulting firms that they currently hire to scrub these problems…

I'm just speculating here, but these are the kinds of AI use cases that I would find more plausible. The distinction from the B2C examples is that we're talking about less granular systems with fewer eyes on them, often subject to much less market pressure over time, making or supporting chunkier high-dollar decisions… this is where the average public company might expect to find some low-hanging fruit on the AI tree.

But even here, it's important for investors to be as clear and specific as possible about costs and benefits. And even here, we shouldn't assume more than a few years' lead on competitors, because there's nothing inherently proprietary or defensible about these advantages.
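One way to be specific about those costs and benefits is to value the project only over the years the lead is expected to last. The sketch below is a simplified illustration with hypothetical names and numbers (`lead_value`, a flat annual benefit, a single upfront cost, a 10% discount rate), not a full valuation model.

```python
def lead_value(annual_benefit, lead_years, upfront_cost, discount_rate):
    """Net present value of a benefit that lasts only while the lead lasts.

    Assumption (illustrative only): the benefit is flat each year and
    drops to zero once competitors catch up.
    """
    present_value = sum(
        annual_benefit / (1 + discount_rate) ** year
        for year in range(1, lead_years + 1)
    )
    return present_value - upfront_cost


# Hypothetical project: 10 units of savings per year, 25 units upfront.
for years in (1, 3, 5):
    npv = lead_value(annual_benefit=10.0, lead_years=years,
                     upfront_cost=25.0, discount_rate=0.10)
    print(f"{years}-year lead: NPV {npv:.2f}")
```

With these made-up inputs the same project loses money on a one-year lead and pays off on a five-year lead, which is why the assumed length of the lead deserves as much scrutiny as the projected savings.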

Look, a few years' lead can be worth a lot — and there's also nothing wrong with investing in those diminishing returns B2C use cases, as long as the incremental investment is low enough, right?

But almost by definition, the greatest AI breakthroughs for non-tech companies will be the ones that integrate these new tools with other proprietary innovation, in a way that's not easily replicated by competitors. At this point, it feels too early in the development of the tech to even know what that might look like. So in a sense, the more a business truly believes in the potential of AI, the more they should want to wait to invest in it, rather than committing too early to the wrong applications.